The BlockRank paper signals search’s next leap: from synthesis towards comprehension

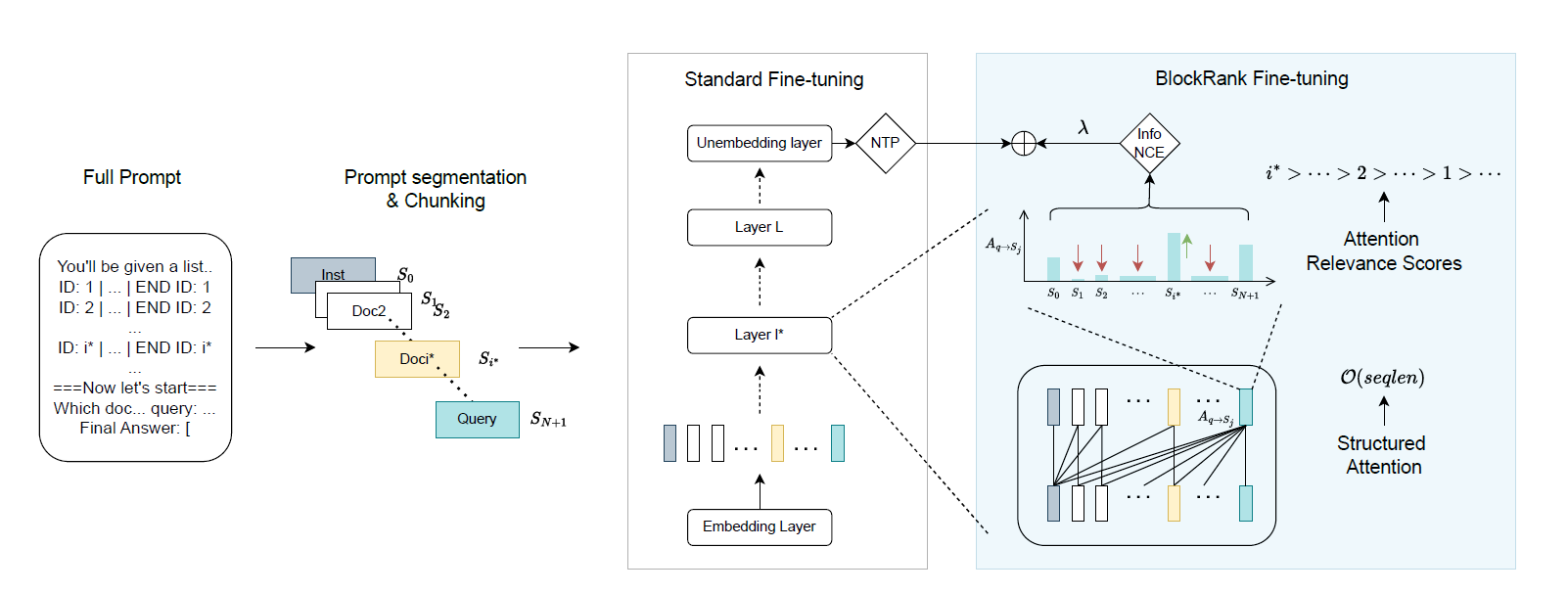

Google DeepMind has published research that signals the next stage of search. The paper – Scalable In-Context Ranking with Generative Models – describes a breakthrough in what’s known as In-context Ranking (ICR).

In ICR, the model reads and evaluates content directly: it processes the query and all candidate documents together, in a single context, and identifies the most relevant document(s).
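To make the idea concrete, here is a minimal sketch of how an in-context ranking prompt might be laid out. The instruction wording, the `[id]` labels and the `build_icr_prompt` helper are all hypothetical, not taken from the paper. The one design assumption worth noting is that the query comes after the documents. A causal model reads left to right, so placing the query last lets its tokens attend to every candidate.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    doc_id: str
    text: str


def build_icr_prompt(query: str, candidates: list[Candidate]) -> str:
    """Hypothetical ICR prompt: instructions, every candidate, then the query."""
    parts = [
        "You are given a list of documents, each with an ID. "
        "After reading them, output the ID of the document most relevant to the query."
    ]
    # Every candidate sits in the same context, so the model can read them side by side.
    for c in candidates:
        parts.append(f"[{c.doc_id}] {c.text}")
    # The query goes last so that, in a left-to-right (causal) model,
    # its tokens can attend to every document that came before it.
    parts.append(f"Query: {query}")
    parts.append("Most relevant document ID:")
    return "\n\n".join(parts)


docs = [Candidate("d1", "An overview of attention."), Candidate("d2", "A recipe for bread.")]
print(build_icr_prompt("How does attention work?", docs))
```

The model then answers with a document ID, so ranking becomes a reading task rather than a lookup of precomputed scores.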

The technical detail is in the paper itself. From a content perspective, though, the practical implication is that ranking is moving towards something close to genuine comprehension.

AI, or more specifically large language models (LLMs), will no longer simply assemble answers from sources that look credible. They will read and compare those sources, assessing which most directly and convincingly addresses the query’s intent.

AI as synthesiser

Search today positions AI as a synthesiser. In this paradigm, AI doesn’t judge which source is ‘best’ – it blends multiple partial answers into a composite response.

In Google’s AI Mode and AI Overviews, queries fan out into multiple sub-queries to probe intent from various angles, retrieving and fusing fragments into adaptive, conversational answers.

This demands content with depth, clarity, structure, and authority. Structured long-form pieces with precise entity definitions already outpace thin, keyword-stuffed pages in AI citations, even when they are published on sites with lower domain authority.

AI relies on material that withstands scrutiny: comprehensive enough to cover a query from multiple angles, organised so it can be easily reassembled, and authoritative enough to earn trust over the course of a conversation.

Content like this is drawn on again and again in the synthesis loop, because models favour interpretable sources they can cross-reference and reuse, prioritising conceptual depth and credible frameworks over superficial takes.

AI as comprehender

If the synthesiser model is defined by blending answers, the next stage is defined by judging them. This is the shift BlockRank enables.

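In-context Ranking (ICR) was already possible in principle. But standard attention lets every token attend to every other token, so each document is effectively compared against every other document in the prompt. That cost grows quadratically with the number of candidates, which made ICR computationally impractical at scale.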

BlockRank makes that viable. By restructuring how attention works, it teaches the model to focus where it matters – each document in relation to the query, not to every other document. The query becomes the lens, scanning across all candidates to see which aligns most closely with its intent.
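A minimal sketch of the kind of structured attention mask described above, based on our reading of the paper rather than its code. Document tokens see the shared instructions and their own document, but not other documents. Query tokens, placed last, see everything before them. The segment lengths and the helper names (`blockrank_style_mask`, `allowed_pairs`, `dense_causal_pairs`) are hypothetical, and a real model applies such a mask inside a transformer rather than as a Python list.

```python
def blockrank_style_mask(instr_len: int, doc_lens: list[int], query_len: int) -> list[list[bool]]:
    """Return mask[i][j] == True when token i may attend to token j."""
    # Label every position: -1 = shared instructions, 0..N-1 = documents, N = query.
    query_seg = len(doc_lens)
    seg = [-1] * instr_len
    for doc_index, length in enumerate(doc_lens):
        seg += [doc_index] * length
    seg += [query_seg] * query_len

    size = len(seg)
    mask = [[False] * size for _ in range(size)]
    for i in range(size):
        for j in range(i + 1):  # causal: a token only looks at itself and earlier tokens
            if seg[i] == query_seg:      # query tokens see every earlier token
                mask[i][j] = True
            elif seg[j] == -1:           # every token sees the shared instructions
                mask[i][j] = True
            elif seg[i] == seg[j]:       # document tokens see only their own document
                mask[i][j] = True
    return mask


def allowed_pairs(mask: list[list[bool]]) -> int:
    """Number of (attending token, attended token) pairs the mask permits."""
    return sum(sum(row) for row in mask)


def dense_causal_pairs(size: int) -> int:
    """Pairs a standard causal mask permits over a prompt of the same length."""
    return size * (size + 1) // 2


mask = blockrank_style_mask(instr_len=2, doc_lens=[3, 3], query_len=2)
print(allowed_pairs(mask), dense_causal_pairs(len(mask)))  # 46 55
```

With two tiny documents the saving is small. Each document block, however, grows with that document’s own length rather than with the whole prompt. The gap with dense attention therefore widens quickly as more candidates are added.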

That architectural shift – simple but profound – turns this type of machine comprehension into something that can run fast enough for real-world search.

The result is a model that doesn’t just assemble responses from its sources; it compares those sources directly. While In-Context Ranking doesn’t apply logic or reasoning in a human way, it increasingly approximates how people judge content: by reading multiple sources side by side, detecting contextual fit, and rewarding clarity and completeness over surface-level relevance.

This is the real shift: from inference to understanding – from signals about content to signals within content.

Machine-readable authority

As AI shifts from assembling to judging, visibility now depends on machine-readable authority – how well your knowledge can be parsed, verified, and trusted within the model’s own reasoning.

This is what Dual-Intelligence Architecture (DIA) is for. It is a framework for communicating across two layers of understanding.

Upstream – machine comprehension.

Authority is established here. Internal logic, explicit entity mapping, structure, schema, and cited evidence form a clear chain that teaches the machine your subject expertise and authority. Content organised this way lets models evaluate relevance directly within context and treat your work as a primary source.
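As one illustration of explicit entity mapping and cited evidence expressed as schema, the sketch below builds schema.org JSON-LD for an article. The `about`, `sameAs` and `citation` properties are standard schema.org vocabulary. Every value, including the example.org entity URL and the author name, is a hypothetical placeholder. Whether a comprehension-based ranker reads this markup directly is an assumption the paper does not address.

```python
import json

# All values below are hypothetical placeholders.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Example headline",
    "author": {"@type": "Person", "name": "Example Author"},
    # Explicit entity mapping: what the piece is about, tied to a stable identifier.
    "about": {
        "@type": "Thing",
        "name": "In-context ranking",
        "sameAs": "https://example.org/entity/in-context-ranking",
    },
    # Cited evidence that the article's claims rest on.
    "citation": {
        "@type": "ScholarlyArticle",
        "name": "Scalable In-Context Ranking with Generative Models",
    },
}

# Wrap the JSON-LD in the script tag that pages conventionally use to embed it.
snippet = f'<script type="application/ld+json">{json.dumps(article, indent=2)}</script>'
print(snippet)
```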

Downstream – human engagement.

Once upstream signals are clear, that understanding propagates into AI answers, snippets, and recommendations that shape what people read, trust, and act on.

Practical takeaway: build upstream for machines so downstream human visibility and engagement can follow.

Signals still matter, but content determines the rank

External signals like backlinks, authoritative mentions, and brand credibility aren’t disappearing. But their function is shifting.

In traditional search, signals guided ranking from start to finish – discovery, evaluation, and final placement all relied on these external markers of quality.

With comprehension-based systems like BlockRank, their role ends much earlier in the process. Signals create the initial candidate pool, determining which content gets considered, but then the baton passes to the LLM itself. The final ranking decision comes from direct evaluation: the model reading, comparing, and judging the substance of the content.
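That handoff can be sketched as a simple retrieve-then-rerank pipeline. `signal_score` and `content_score` are hypothetical stand-ins. The first represents external signals such as links and mentions. The second represents the LLM’s direct reading, played here by a toy word-overlap scorer. The sketch illustrates the division of labour only and does not describe any production system.

```python
from typing import Callable


def two_stage_rank(
    query: str,
    corpus: dict[str, str],
    signal_score: Callable[[str], float],
    content_score: Callable[[str, str], float],
    pool_size: int = 100,
) -> list[str]:
    """Hypothetical retrieve-then-rerank pipeline returning document IDs, best first."""
    # Stage 1: external signals decide which documents make it into the candidate pool.
    pool = sorted(corpus, key=signal_score, reverse=True)[:pool_size]
    # Stage 2: the final order depends only on reading each candidate against the query.
    return sorted(pool, key=lambda doc_id: content_score(query, corpus[doc_id]), reverse=True)


def word_overlap(query: str, text: str) -> float:
    """Toy stand-in for the model's judgement: count words shared with the query."""
    return float(len(set(query.lower().split()) & set(text.lower().split())))


corpus = {
    "a": "backlinks and brand mentions galore",
    "b": "how blockrank attention works",
    "c": "blockrank attention explained in depth",
    "d": "blockrank attention and query intent",
}
signals = {"a": 0.9, "b": 0.8, "c": 0.7, "d": 0.1}

print(two_stage_rank("blockrank attention", corpus, signals.__getitem__, word_overlap, pool_size=3))
# ['b', 'c', 'a']
```

Document `d` never reaches the second stage despite matching the query. Document `a` enters the pool on signals alone and then drops to last once its content is read.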

Signals get you into the evaluation. Comprehension determines where you rank within it.

That handoff is why content structure matters more than ever.

Build for comprehension now

BlockRank isn’t live yet, but the direction is clear. The brands building for machine comprehension now, with structured depth, verifiable claims, and entity precision, are positioning themselves for the moment AI stops inferring quality and starts reading it directly.

That moment is closer than most realise, and it will require a shift in content strategy.