Don’t chase lagging signals – shape the conversation and become the primary source.

As AI search reshapes online discovery, there’s a proliferation of new tools and tactics promising to show exactly which prompts your content appears in and how you should optimise for them.

The workflow is seductive: audit prompts, find the gaps, and create content to plug them. In practice, many teams end up chasing lagging, fragmentary signals from past queries.

The real question: does this build authority, or does it just tune content to dashboards?

The reactive model: The trap of probabilistic gap-plugging

The ‘prompt-plugging’ model is shaping up as a kind of ill-fitting keyword research 2.0. It is appealing because it looks measurable, methodical, and data-driven, but it creates a strategic trap: misapplying a deterministic tactic to a probabilistic system.

Traditional keyword SEO worked because search was largely deterministic – you could check a query and reliably see your rank within Google’s standardised search engine results pages (SERPs), so granular optimisation made sense.

AI search is probabilistic – the same prompt can return different answers depending on the model, phrasing, user personalisation, location, and the conversation leading up to it.

Trying to ‘win a prompt’ at this granular level is optimising for a moving target. In many ways it’s the tail wagging the dog – letting a single, fragmentary readout steer your editorial choices.
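To see why a single readout is a weak basis for editorial decisions, the sketch below treats each run of a prompt as a yes/no trial (cited or not) and computes a Wilson score interval for the underlying citation rate. The function name and the run counts are illustrative assumptions, not data from any tool.

```python
import math


def wilson_interval(hits: int, runs: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a citation rate.

    hits: runs in which the brand was cited (hypothetical counts)
    runs: total times the same prompt was sampled
    """
    if runs <= 0 or not 0 <= hits <= runs:
        raise ValueError("need runs > 0 and 0 <= hits <= runs")
    p = hits / runs
    denom = 1 + z**2 / runs
    centre = (p + z**2 / (2 * runs)) / denom
    half = z * math.sqrt(p * (1 - p) / runs + z**2 / (4 * runs**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the edges.
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical counts: cited in the only run checked vs. cited in 30 of 100 runs.
single = wilson_interval(1, 1)
repeated = wilson_interval(30, 100)
print(f"1 of 1 runs:    {single[0]:.2f} to {single[1]:.2f}")
print(f"30 of 100 runs: {repeated[0]:.2f} to {repeated[1]:.2f}")
```

A single positive run is consistent with a true citation rate anywhere from roughly one in five to always, which is why one dashboard reading says little about where you actually stand. Even the narrower interval from repeated sampling only describes past behaviour under one model and phrasing.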

Crucially, the content it yields isn’t built to generate real-world authority signals because it’s fundamentally reactive. It doesn’t challenge assumptions or introduce new ideas that get people talking. It optimises for a narrow readout of queries that already exist, so the output often reads like a product description or user manual – functional but sterile.

Worse, this makes it the perfect target for AI-driven cannibalisation. Because it contains no original ideas, the AI can summarise it and present the answer directly, often without citing your page at all. Agenda-setting content, by contrast, introduces novel concepts, guidance or frameworks that AI systems are compelled to cite to validate their own answers.

Content that only responds to what’s already been asked will struggle to set an agenda. It creates a commodity that neither defines nor differentiates your brand to AI, and doesn’t get shared, cited by media, or spark human-to-human conversation. It’s a maintenance tactic, not an authority-building strategy.

The proactive model: Agenda-setting and entity authority

The strategic alternative is the agenda-setting model. It avoids the trap of reverse-engineering content from past AI fragments. Instead of just ‘filling in blanks,’ the premise is to be proactive: create the original, agenda-setting content that helps define which questions get asked in your subject area in the first place.

This doesn’t need to be overwhelming, and it doesn’t have to be front-page news. It rests on Google’s E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) principles – and is essentially grounded in your company’s real-world operations and in-house knowledge.

It’s about unearthing and articulating your unique perspective in your niche to offer something new and useful to your target audience. This applies at any scale – from finance regulation in Australia to the benefits of using natural materials over plastic in the manufacturing of caravan annexes in the tropical north.

When you use this expertise to shape the conversation, you generate real-world authority signals: citations in relevant industry blogs, mentions in local newsletters, or interviews on sector podcasts about your ideas or framework.

These are leading indicators that AI systems use to judge who is truly authoritative on a topic – a sharp contrast to the lagging indicators from prompt-plugging.

That real-world authority is the foothold. From there, you build depth and breadth, scaling that single idea into a true authority corpus – a structured long-form article or linked series that functions as the definitive resource on your topic.

This corpus is a deliberate architecture that intertwines your new, original frameworks with the broader, established expertise in your field.

This process demonstrates to the AI the full context of your authority – showing it how your new ideas connect to the existing AI knowledge graph and establishing ownership of a topic niche.

Market validation: The return of editorial authority

This isn’t just a theory; the market is already voting with its dollars. Look at the hiring patterns.

The AI leaders themselves are investing heavily in senior editorial talent:

- OpenAI is seeking a Content Strategist (up to $US 393k/year)

- Anthropic is hiring a Managing Editor (up to $US 320k/year)

And this trend is accelerating across enterprise:

- Citi is recruiting a Head of Content Marketing (up to $US 500k/year)

- JPMorganChase is recruiting an Editorial Director (salary not specified)

- Zip Co is recruiting an Associate Director of Content (up to $US 150k/year)

These aren’t peripheral marketing roles; they sit at the core of driving trust and authority. This pattern signals a clear recognition: narrative coherence and human editorial skill are now competitive assets, not nice-to-haves.

Of course, a high-level editorial strategy isn’t new. What’s new is editorial as infrastructure – entity-mapped, semantically linked, with explicit internal logic and hierarchical information, schema-marked, and validated by external signals.

As AI systems rely more on trustworthy, structured sources, the companies that can produce this clear, research-backed, agenda-setting material are positioning themselves to win.

The compounding value of authoritative content as AI evolves

The shift we’re already seeing – where AI prioritises deep, structured content for its answers – is not the endpoint. This trend is set to compound as AI technology rapidly evolves.

To understand this trajectory, it’s useful to categorise the two distinct phases of this new AI-driven search.

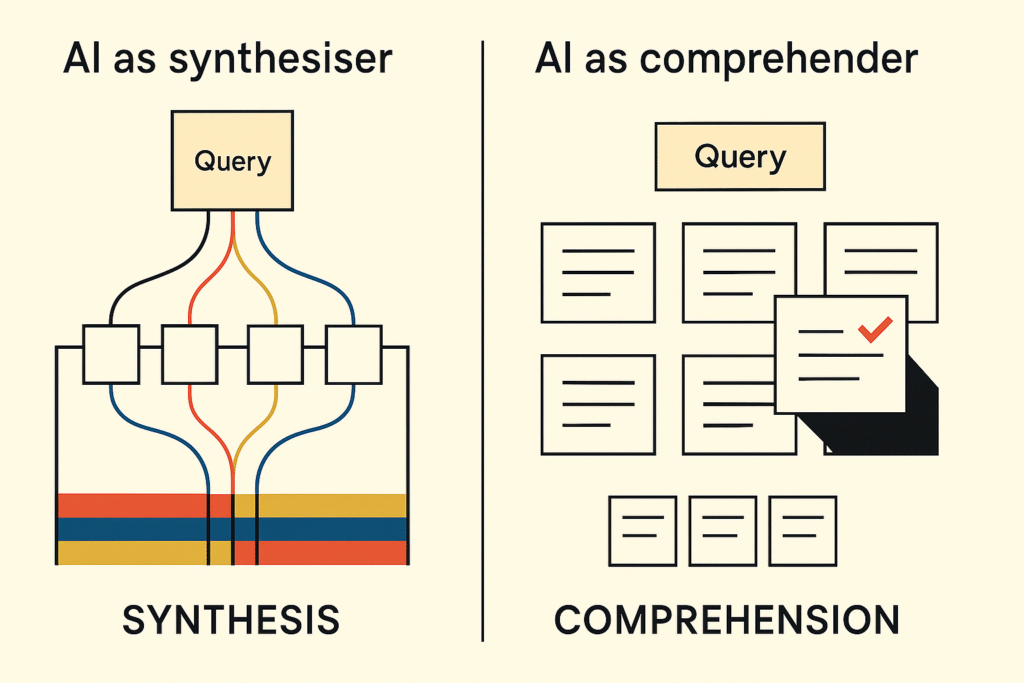

The synthesis era

We’ve moved from the keyword era’s rough proxies into what I call the Synthesis Era – Google’s AI Mode and AI Overviews fan out a query into sub-queries, pull fragments from multiple sources, and blend answers for ongoing, multi-turn conversations.

Content inclusion remains influenced by external signals (backlinks, mentions, brand), but AI now understands queries as multi-faceted – fanning out to explore angles, nuances, and adjacent concepts across the content landscape. This mass synthesis applies sophisticated semantic assessment, prioritising relevance, rigour, and source concordance over rough proxies like raw backlink volume or keyword presence.

This synthesis demands content with depth, breadth, clarity and structure. Entity-mapped long-form pieces already outpace thin, keyword-focused content in AI citations – even when they come from sites with lower domain authority.

That’s because their internal logic and coherent frameworks map cleanly to existing AI knowledge graphs, making them reliable building blocks that can be confidently reused across contexts. And as outlined earlier, this same authoritative approach naturally generates the semantic signals (contextual citations, relevant mentions) that AI prioritises.

The comprehension era

We’re now approaching an even more profound shift. Google DeepMind’s ‘Scalable In-Context Ranking with Generative Models’ (BlockRank) signals what I call the Comprehension Era – where AI directly evaluates content blocks against queries, no longer just assembling from pre-retrieved sources.

This changes everything: AI moves from inferring quality through external signals to assessing the content itself.

In-context ranking (ICR) means AI compares multiple candidates head-to-head, judging their internal logic, evidence quality, and conceptual coherence.

The strategic mandate is stark: External signals get you into the evaluation; internal comprehension decides where you rank.

The authority corpus – with its clear frameworks and original thinking – becomes a recognised primary source. Not just because of what others say about it, but because of what it actually contains.

Measurement and tools: Diagnostics, not directives

Tools that analyse AI prompts and their associated tactics are not useless, but their role must be clearly defined; they should not be used to structure your core content strategy.

Instead, they are valuable in two specific phases:

1. Directional testing

This phase is focused on strategic reconnaissance. The tools are used to ‘test the air’ and see directionally where the AI’s understanding is headed, spotting the broad topics and themes it’s currently prioritising in your landscape. This is probabilistic sensing, not a content brief.

2. Iterative optimisation

This phase is for last-mile polish and light upkeep. It involves applying tools to:

- Guide final, small tweaks to new content before it goes live.

- Inform the ongoing, minor adjustments and consolidations to your existing content.

- Specifically: Tighten headings/summaries, add or adjust FAQs/definitions, fix entity labels and synonyms, add lightweight schema, and verify AI-bot renderability.
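As one illustration of ‘lightweight schema’, the sketch below builds FAQPage markup using the schema.org vocabulary (`FAQPage`, `Question`, `acceptedAnswer`, `Answer`). The helper name `faq_jsonld` and the sample question are hypothetical placeholders, and whether any given AI system reads this markup is an assumption rather than a guarantee.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Placeholder content; replace with questions your own experts actually answer.
markup = faq_jsonld([
    (
        "What is an authority corpus?",
        "A structured long-form article or linked series that functions "
        "as the definitive resource on a topic.",
    ),
])

# Wrap the JSON-LD in the script tag that would be placed in the page HTML.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Markup like this only labels what the page already says. It belongs in the polish phase because it cannot supply the original thinking the page needs in the first place.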

These tools are diagnostic radar, not an autopilot. Their data shows how AI is currently parsing your field, not what you should write next. The leader’s mindset is to teach and interpret the machine, not just obey it.

Essentially, what needs to dictate content direction is human expertise – in both the subject matter and the audience you’re trying to reach.

Define the terrain, don’t follow the trail

Teams racing to ‘win prompts’ are chasing their own tails – exhausting, expensive, and ultimately futile. Without an editorial strategy that guides what the brand stands for and where it’s going, they’re just reacting to past fragments.

The companies that will own their categories aren’t asking “Which prompts should we target?” They’re asking “What should we contribute to our field?” They invest in agenda-setting, evidence-backed content that solves real problems. Exactly the type of content AI harvests for its conversational answers.

The brands defining the conversation aren’t filling gaps – they’re building the reference layer of AI search now and into the future.
